From Cloud to Edge: The Next Era of Decentralized Software Development

For more than a decade, cloud computing has been the beating heart of modern software development. Web apps, mobile apps, and enterprise systems alike have relied on centralized cloud infrastructure to deliver speed, scalability, and global reach.

But as we step into 2030, the paradigm is shifting again. The cloud is no longer enough on its own — the future lies beyond it, in a world where computing happens closer to the user, not in distant data centers.

Welcome to the age of Edge Computing — the next evolution of decentralized software development.

This article explores how the transition from Cloud to Edge is changing the way developers build, deploy, and manage applications, and what it means for the future of intelligent, real-time software.

1. What Is Edge Computing?

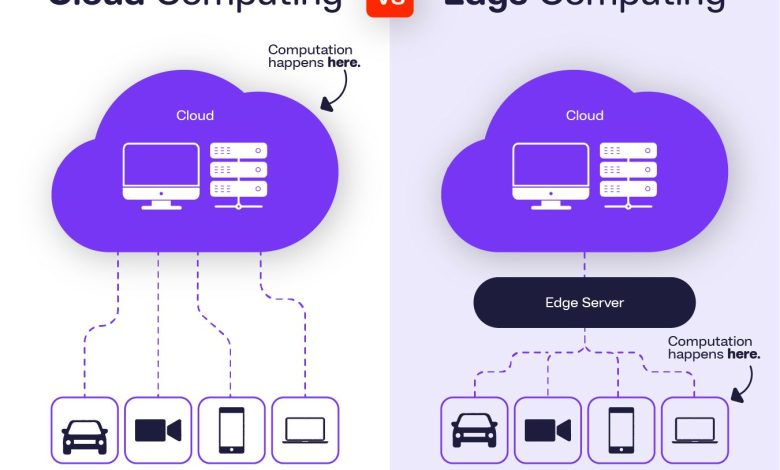

In traditional cloud models, data is processed in centralized servers (like AWS, Azure, or Google Cloud).

Edge computing flips that model: it processes data locally, near the source — whether that’s a smartphone, IoT sensor, autonomous vehicle, or local gateway.

Simple Definition:

Edge computing is the practice of processing data as close to its origin as possible, reducing latency and dependency on centralized infrastructure.

Instead of sending every request to a faraway server, edge-enabled apps handle much of the computation on-device or at the network’s edge.

This architecture can be faster, more private, and more bandwidth-efficient for modern, data-intensive applications, because less data has to cross the network.
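As a minimal sketch of what "processing close to the origin" can look like, the snippet below reduces a window of raw sensor readings to one small summary on the device, so only the summary would need to travel upstream. The function name, the sample values, and the alert threshold are all illustrative assumptions.

```python
from statistics import mean

def summarize_window(readings, alert_threshold):
    """Reduce a window of raw readings to one small summary record."""
    return {
        "count": len(readings),
        "mean": round(mean(readings), 2),
        "max": max(readings),
        "alert": max(readings) > alert_threshold,
    }

# Hypothetical temperature samples collected on the device.
window = [21.0, 21.5, 22.0, 35.5, 21.5]
summary = summarize_window(window, alert_threshold=30.0)
print(summary)
```

Five readings become a single record, and the alert decision is made locally without waiting on a remote server.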

2. Why the Shift from Cloud to Edge?

Cloud computing revolutionized scalability, but it introduced challenges:

- Latency: Even milliseconds matter in AR/VR, robotics, and real-time analytics.

- Bandwidth costs: Streaming massive data to the cloud isn’t sustainable.

- Privacy: Regulations like GDPR restrict how personal data is transferred and stored, which makes processing it locally attractive.

- Offline operation: Many modern devices must work even without constant connectivity.

Edge computing addresses these problems by bringing intelligence closer to the action.

For developers, that means apps that respond instantly, handle sensitive data locally, and operate seamlessly even in unreliable network conditions.

3. The Edge Architecture: How It Works

A typical edge ecosystem involves multiple layers of computation working together:

| Layer | Role |
|---|---|
| Device Layer (Edge Nodes) | Smartphones, IoT devices, sensors performing initial data collection and computation. |
| Edge Gateway / Micro Data Center | Aggregates and processes local data before syncing with the cloud. |
| Cloud Layer | Performs large-scale data analytics, storage, and orchestration. |

This distributed model enables developers to balance speed, reliability, and scalability — using each layer for what it does best.
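The three layers can be sketched as a small pipeline. This is a simplified illustration, not a reference design: the function names, the `CloudLayer` class, the `min_change` filter, and the sensor data are all hypothetical.

```python
from collections import defaultdict

def device_layer(raw_samples, min_change=0.5):
    """Device: drop samples that barely differ from the last one kept."""
    kept = []
    for value in raw_samples:
        if not kept or abs(value - kept[-1]) >= min_change:
            kept.append(value)
    return kept

def gateway_layer(per_device_samples):
    """Gateway: aggregate each device's samples into one record."""
    return {
        device_id: {"n": len(samples), "avg": sum(samples) / len(samples)}
        for device_id, samples in per_device_samples.items()
        if samples
    }

class CloudLayer:
    """Cloud: long-term storage for the aggregated records."""

    def __init__(self):
        self.history = defaultdict(list)

    def ingest(self, batch):
        for device_id, record in batch.items():
            self.history[device_id].append(record)

# Hypothetical raw readings from two sensors.
raw = {"sensor-a": [20.0, 20.1, 20.2, 21.0, 21.1], "sensor-b": [5.0, 7.0]}
filtered = {device_id: device_layer(samples) for device_id, samples in raw.items()}
cloud = CloudLayer()
cloud.ingest(gateway_layer(filtered))
```

Each layer hands a smaller, more structured payload to the next one, which is the point of splitting the work this way.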

4. Technologies Powering Edge Development

The rise of edge computing is driven by several key technologies:

- 5G Networks: Ultra-low latency connectivity makes real-time edge operations possible.

- AI at the Edge: Lightweight neural networks (TinyML, TensorFlow Lite) enable local inference.

- Containers and Kubernetes: Microservices can now run efficiently on edge devices using lightweight Kubernetes distributions like K3s, with image-minification tools like Docker Slim keeping container images small.

- WebAssembly (Wasm): Allows developers to run high-performance code across devices securely.

- Serverless at the Edge: Platforms like Cloudflare Workers, AWS Lambda@Edge, and Vercel Edge Functions are redefining how we deploy applications.

These technologies collectively form the Edge DevOps stack, making decentralized software development both powerful and accessible.

5. Benefits of Edge Computing for App Developers

The shift to edge isn’t just a trend — it’s a massive strategic advantage for developers and businesses.

a. Ultra-Low Latency

Apps like AR/VR, autonomous driving, and real-time gaming demand instant responses.

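Processing data at the edge can cut round-trip latency substantially, for example from around 100 ms for a distant cloud region to under 10 ms for a nearby edge node, depending on the network.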
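A rough way to see why distance matters is to estimate the propagation delay alone. The sketch below assumes signals travel through fiber at roughly 200 km per millisecond (about two-thirds of the speed of light in vacuum). The distances are hypothetical, and real round trips add routing, queuing, and processing time on top of this floor.

```python
FIBER_SPEED_KM_PER_MS = 200.0  # approximate signal speed in optical fiber

def round_trip_ms(distance_km, processing_ms=0.0):
    """Lower bound on round-trip time: propagation both ways plus processing."""
    return 2 * distance_km / FIBER_SPEED_KM_PER_MS + processing_ms

cloud_rtt = round_trip_ms(2000)  # hypothetical distant cloud region
edge_rtt = round_trip_ms(20)     # hypothetical nearby edge node

print(f"cloud: {cloud_rtt:.1f} ms, edge: {edge_rtt:.1f} ms")
```

Under these assumptions the distant region has a floor of about 20 ms before any work is done, while the nearby node's floor is a fraction of a millisecond.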

b. Better Privacy and Compliance

Sensitive data can stay on the user’s device, which is critical for industries like healthcare, finance, and smart cities.

c. Cost Efficiency

Reducing cloud data transfers saves bandwidth costs and lowers carbon footprints.
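The bandwidth saving is easy to estimate with back-of-the-envelope arithmetic. The figures below are illustrative assumptions, not measurements: a camera streaming at 4 Mbps around the clock, compared with one that analyzes video locally and uploads only 10 minutes of flagged footage per day.

```python
SECONDS_PER_DAY = 24 * 60 * 60

def monthly_gb(bitrate_mbps, active_seconds_per_day, days=30):
    """Data uploaded per month in decimal gigabytes."""
    megabits = bitrate_mbps * active_seconds_per_day * days
    return megabits / 8 / 1000  # megabits -> megabytes -> gigabytes

always_on = monthly_gb(4, SECONDS_PER_DAY)  # stream everything to the cloud
events_only = monthly_gb(4, 10 * 60)        # upload flagged clips only

print(f"always on: {always_on:.0f} GB, events only: {events_only:.0f} GB")
```

With these assumed numbers, a single camera drops from roughly 1.3 TB to 9 GB of upload per month.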

d. Offline and Resilient Apps

Edge apps can operate independently of cloud connectivity — ensuring uptime even during outages.
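One common way to get this resilience is a store-and-forward buffer: messages are queued locally and delivered in order once the uplink returns. The sketch below is a minimal in-memory version. The class names and the `FakeUplink` stand-in are hypothetical, and a real device would likely persist the buffer to disk.

```python
from collections import deque

class StoreAndForward:
    def __init__(self, send, max_buffered=1000):
        self._send = send  # callable that raises ConnectionError when offline
        self._buffer = deque(maxlen=max_buffered)  # oldest entries dropped when full

    def submit(self, message):
        self._buffer.append(message)
        self.flush()

    def flush(self):
        """Deliver buffered messages in order; stop at the first failure."""
        while self._buffer:
            try:
                self._send(self._buffer[0])
            except ConnectionError:
                return False  # still offline; keep messages for later
            self._buffer.popleft()
        return True

    @property
    def pending(self):
        return len(self._buffer)

class FakeUplink:
    """Stand-in for a network client, used only for this demo."""

    def __init__(self):
        self.online = False
        self.delivered = []

    def send(self, message):
        if not self.online:
            raise ConnectionError("uplink unavailable")
        self.delivered.append(message)

uplink = FakeUplink()
outbox = StoreAndForward(uplink.send)
outbox.submit("reading-1")  # buffered while offline
outbox.submit("reading-2")  # buffered while offline
uplink.online = True
outbox.submit("reading-3")  # triggers delivery of all three, in order
```

A message is removed from the buffer only after the send succeeds, so a dropped connection mid-flush does not lose data.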

e. Scalability Redefined

Instead of scaling out only within a handful of centralized data centers, edge apps scale horizontally across millions of distributed devices.

6. Real-World Examples of Edge Computing

Edge computing is already transforming multiple industries:

| Industry | Edge Application |
|---|---|
| Automotive | Autonomous driving systems that make split-second decisions. |
| Healthcare | Real-time patient monitoring and diagnosis on wearable devices. |
| Retail | Smart inventory tracking and cashier-less checkouts. |
| Manufacturing | Predictive maintenance in factories via IoT sensors. |
| Telecom | Network optimization and content delivery via edge nodes. |
| Gaming | Real-time rendering and cloud gaming with near-zero lag. |

Companies like Tesla, Amazon, Siemens, and NVIDIA are already building edge-native solutions — paving the way for fully decentralized ecosystems.

7. Edge and AI: Intelligence at the Source

One of the most powerful trends of the 2030s will be the combination of Edge Computing and Artificial Intelligence.

Instead of sending data to the cloud for AI inference, AI models now run directly at the edge:

- A security camera identifies intruders locally.

- A smartwatch detects heart anomalies without internet access.

- A self-driving car analyzes its surroundings in milliseconds.

This synergy, known as Edge AI, allows for faster decisions, enhanced privacy, and real-time adaptability.
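To make the smartwatch example concrete, here is a deliberately simple stand-in for an on-device model: a rolling z-score detector that flags readings far from the recent baseline. A production wearable would use a trained model instead, and the class name, window size, threshold, and heart-rate values are all assumptions for illustration.

```python
from collections import deque
from statistics import mean, pstdev

class LocalAnomalyDetector:
    def __init__(self, window=5, z_threshold=3.0):
        self._recent = deque(maxlen=window)
        self._z_threshold = z_threshold

    def observe(self, value):
        """Return True if value is anomalous relative to the recent window."""
        anomalous = False
        if len(self._recent) == self._recent.maxlen:
            mu = mean(self._recent)
            sigma = pstdev(self._recent)
            if sigma > 0 and abs(value - mu) / sigma > self._z_threshold:
                anomalous = True
        if not anomalous:
            self._recent.append(value)  # keep outliers out of the baseline
        return anomalous

detector = LocalAnomalyDetector()
heart_rates = [72, 74, 71, 73, 72, 75, 140, 73]  # hypothetical bpm samples
flags = [detector.observe(bpm) for bpm in heart_rates]
print(flags)
```

Everything here runs without a network call, which is what lets the device react even when it is offline.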

Developers can use frameworks like:

- TensorFlow Lite

- PyTorch Mobile

- ONNX Runtime

- NVIDIA JetPack SDK (for Jetson devices)

to deploy compact machine learning models optimized for edge hardware.
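Much of the size reduction in such models comes from quantization, which stores weights as small integers instead of 32-bit floats. The sketch below shows symmetric per-tensor int8 quantization in plain Python to illustrate the idea. It does not use any of the frameworks above, and the weight values are made up.

```python
def quantize(weights):
    """Map floats to the int8 range using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float values from the integers."""
    return [v * scale for v in quantized]

weights = [0.5, -1.27, 0.003, 1.0]  # hypothetical model weights
q, scale = quantize(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(q, f"max error: {max_error:.4f}")
```

Each weight now fits in one byte instead of four, at the cost of a rounding error bounded by half the scale factor.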

8. Challenges in Decentralized Development

Despite its promise, edge computing introduces new complexities:

a. Fragmentation

Unlike centralized clouds, edge devices vary widely in hardware, OS, and connectivity.

Developers must design flexible, adaptive software.

b. Security Risks

Each edge node becomes a potential entry point for attackers.

Developers need strong encryption, identity management, and OTA (Over-the-Air) patching.
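As one small piece of that picture, a gateway can check that a message really came from a known device and was not altered in transit. The sketch below uses an HMAC with a per-device shared key from Python's standard library. Note that this provides integrity and authenticity, not encryption, and that the key and payload shown are placeholders.

```python
import hashlib
import hmac
import json

def sign(payload: dict, key: bytes) -> dict:
    """Serialize the payload and attach an HMAC-SHA256 tag."""
    body = json.dumps(payload, sort_keys=True).encode("utf-8")
    tag = hmac.new(key, body, hashlib.sha256).hexdigest()
    return {"body": body.decode("utf-8"), "tag": tag}

def verify(message: dict, key: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    expected = hmac.new(key, message["body"].encode("utf-8"), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["tag"])

device_key = b"demo-key-do-not-use-in-production"  # placeholder secret
message = sign({"device": "sensor-a", "temp": 21.5}, device_key)

# Simulate an attacker changing the reading in transit.
tampered = dict(message, body=message["body"].replace("21.5", "99.9"))

print(verify(message, device_key), verify(tampered, device_key))
```

In practice the key would be provisioned securely per device and rotated, which is where identity management and OTA updates come in.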

c. Deployment Complexity

Coordinating updates across millions of distributed nodes requires smart orchestration tools like KubeEdge and Open Horizon.
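A building block behind many staged rollouts is deterministic bucketing: each device is hashed into a stable bucket, and an update reaches only the buckets below the current rollout percentage. The sketch below illustrates that idea in isolation. It is not how KubeEdge or Open Horizon implement rollouts, and the function names and device IDs are hypothetical.

```python
import hashlib

def rollout_bucket(device_id: str, buckets: int = 100) -> int:
    """Map a device ID to a stable bucket in the range [0, buckets)."""
    digest = hashlib.sha256(device_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % buckets

def should_update(device_id: str, rollout_percent: int) -> bool:
    """A device updates once the rollout percentage passes its bucket."""
    return rollout_bucket(device_id) < rollout_percent

fleet = [f"device-{i}" for i in range(1000)]  # hypothetical fleet
wave_10 = {d for d in fleet if should_update(d, 10)}
wave_50 = {d for d in fleet if should_update(d, 50)}

print(len(wave_10), len(wave_50))
```

Because the bucket depends only on the device ID, every device in the 10% wave is also in the 50% wave, so raising the percentage only ever adds devices.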

d. Debugging and Monitoring

Troubleshooting issues on remote, distributed hardware can be difficult — necessitating new observability tools tailored for edge environments.

9. The Rise of Edge-Native Development

Just as the 2010s birthed cloud-native apps, the 2030s will give rise to edge-native development — software designed from the ground up for distributed environments.

Principles of Edge-Native Development:

- Local-first architecture — prioritize edge computation.

- Intermittent connectivity tolerance — graceful offline fallback.

- Event-driven design — rely on local triggers and reactive patterns.

- Lightweight containers — minimal footprint for low-power devices.

- Zero-trust security — assume every node could be compromised.

Frameworks like EdgeX Foundry, Azure IoT Edge, and KubeEdge are shaping this new paradigm.
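The first two principles can be sketched together: reads are always served from local state, and a separate sync step refreshes that state only when the network allows. The `LocalFirstStore` class, the `fetch_remote` callable, and the config key below are hypothetical names chosen for illustration.

```python
class LocalFirstStore:
    def __init__(self, fetch_remote):
        self._fetch_remote = fetch_remote  # may raise ConnectionError
        self._local = {}

    def get(self, key, default=None):
        """Always answer from local state; never block on the network."""
        return self._local.get(key, default)

    def sync(self, keys):
        """Refresh local state when the network allows; report success."""
        try:
            for key in keys:
                self._local[key] = self._fetch_remote(key)
        except ConnectionError:
            return False
        return True

# Demo: a fake remote config service that can go offline.
remote_config = {"sample_rate_hz": 10}
link = {"online": True}

def fetch_remote(key):
    if not link["online"]:
        raise ConnectionError("offline")
    return remote_config[key]

store = LocalFirstStore(fetch_remote)
store.sync(["sample_rate_hz"])          # succeeds while online
link["online"] = False
remote_config["sample_rate_hz"] = 50    # changes upstream while we are offline
synced = store.sync(["sample_rate_hz"])  # fails gracefully
value = store.get("sample_rate_hz")      # still served locally

print(synced, value)
```

The device keeps working with the last known value and picks up the upstream change on the next successful sync.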

10. The Developer’s Role in the Edge Era

As computing decentralizes, developers will need to think differently.

They’ll evolve from cloud engineers to distributed system architects — balancing local and remote logic, optimizing for speed, and ensuring seamless data synchronization.

Essential Skills for the Edge Developer:

- Understanding network latency and bandwidth trade-offs

- Experience with container orchestration and serverless edge functions

- Familiarity with edge security standards (Zero Trust, PKI)

- Expertise in AI model compression and optimization

- Ability to design resilient distributed systems

In short: the developers who master the edge will define the next decade of digital innovation.

11. The Future: Cloud + Edge = Intelligent Continuum

The future of software isn’t about replacing the cloud — it’s about augmenting it.

Edge and cloud will work together in a continuum, dynamically shifting workloads based on performance, privacy, and cost.

Imagine:

- AI training happens in the cloud.

- AI inference runs at the edge.

- Analytics sync back periodically for continuous learning.

This hybrid model — sometimes called the “Edge-Cloud Continuum” — offers the best of both worlds: global intelligence and local autonomy.
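A placement decision along the continuum could be sketched as a simple rule-based function. The rules, their order, and every threshold below are assumptions made for illustration. A real scheduler would weigh many more factors, including cost.

```python
from dataclasses import dataclass

@dataclass
class Workload:
    name: str
    max_latency_ms: float
    contains_personal_data: bool
    compute_units: float

def place(workload, edge_capacity_units=10.0, cloud_rtt_ms=80.0):
    """Pick 'edge' or 'cloud' using a few illustrative rules."""
    if workload.compute_units > edge_capacity_units:
        return "cloud"  # too heavy for edge hardware
    if workload.contains_personal_data:
        return "edge"   # keep personal data local
    if workload.max_latency_ms < cloud_rtt_ms:
        return "edge"   # the cloud round trip alone would miss the deadline
    return "cloud"

training = Workload("model-training", 3_600_000, False, 500.0)
inference = Workload("camera-inference", 20, True, 2.0)
analytics = Workload("nightly-analytics", 60_000, False, 5.0)

placements = {w.name: place(w) for w in (training, inference, analytics)}
print(placements)
```

Under these rules training and batch analytics land in the cloud while latency-sensitive inference stays at the edge, matching the split described above.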

12. Conclusion: The Decentralized Future Is Already Here

The movement from Cloud to Edge isn’t just an infrastructure upgrade — it’s a redefinition of how software interacts with the physical world.

By decentralizing computation, we make applications faster, smarter, and more human-centric.

We unlock a future where data doesn’t just travel to the cloud — the cloud comes to the data.

Developers who embrace this shift will lead the next revolution — building applications that live everywhere: in pockets, in vehicles, in cities, and even on satellites.

The edge isn’t the end of the cloud.

It’s the next frontier — where cloud intelligence meets real-world immediacy.

And it’s where the future of software development truly begins.